MUSIC4D – Project Overview

When emotion, artificial intelligence, and music merge

MUSIC4D is a multidisciplinary project that brings together performing arts, artificial intelligence, and robotics. At its heart is a system that perceives the audience’s emotional state and generates musical responses in real time, creating a unique interactive performance.

Project Goals

💡 Emotional Interaction

Create a dialogue between the audience and the performance through emotion recognition.

🧬 Multimodal Intelligence

Integrate generative AI to interpret the audience’s voice, face, and behavior.

🎼 Musical Adaptation

Adjust the musical key, rhythm, and intensity in response to the emotional atmosphere of the audience.
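The adaptation step can be pictured as a function from an estimated emotional state to musical parameters. The sketch below is a hypothetical illustration, not the MUSIC4D implementation: it assumes the emotion-analysis stage outputs valence and arousal scores in [-1, 1] (a common dimensional model of emotion that this overview does not specify), reduces "key" to a major/minor choice, and uses arbitrary scaling constants. `adapt_music` and `MusicParams` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class MusicParams:
    mode: str         # "major" or "minor" (simplified stand-in for key)
    tempo_bpm: float  # beats per minute
    velocity: int     # MIDI-style loudness, 1-127


def clamp(x: float, lo: float, hi: float) -> float:
    """Restrict x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def adapt_music(valence: float, arousal: float,
                base_tempo: float = 100.0) -> MusicParams:
    """Hypothetical mapping from an emotion estimate to musical parameters.

    Assumes valence and arousal are scores in [-1, 1]; the scaling
    constants are illustrative, not taken from MUSIC4D.
    """
    valence = clamp(valence, -1.0, 1.0)
    arousal = clamp(arousal, -1.0, 1.0)
    # Pleasant moods -> major mode, unpleasant moods -> minor mode.
    mode = "major" if valence >= 0 else "minor"
    # Higher arousal -> faster tempo, up to +/-30% around the base tempo.
    tempo = base_tempo * (1.0 + 0.3 * arousal)
    # Higher arousal -> louder dynamics: -1 maps to 1, 0 to 64, +1 to 127.
    velocity = round(64 + 63 * arousal)
    return MusicParams(mode, tempo, velocity)
```

For example, a calm, subdued audience (negative valence, low arousal) would yield a minor mode, a slower tempo, and softer dynamics. A live system would likely also smooth the emotion estimate over time so that the music does not jump with every new reading.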

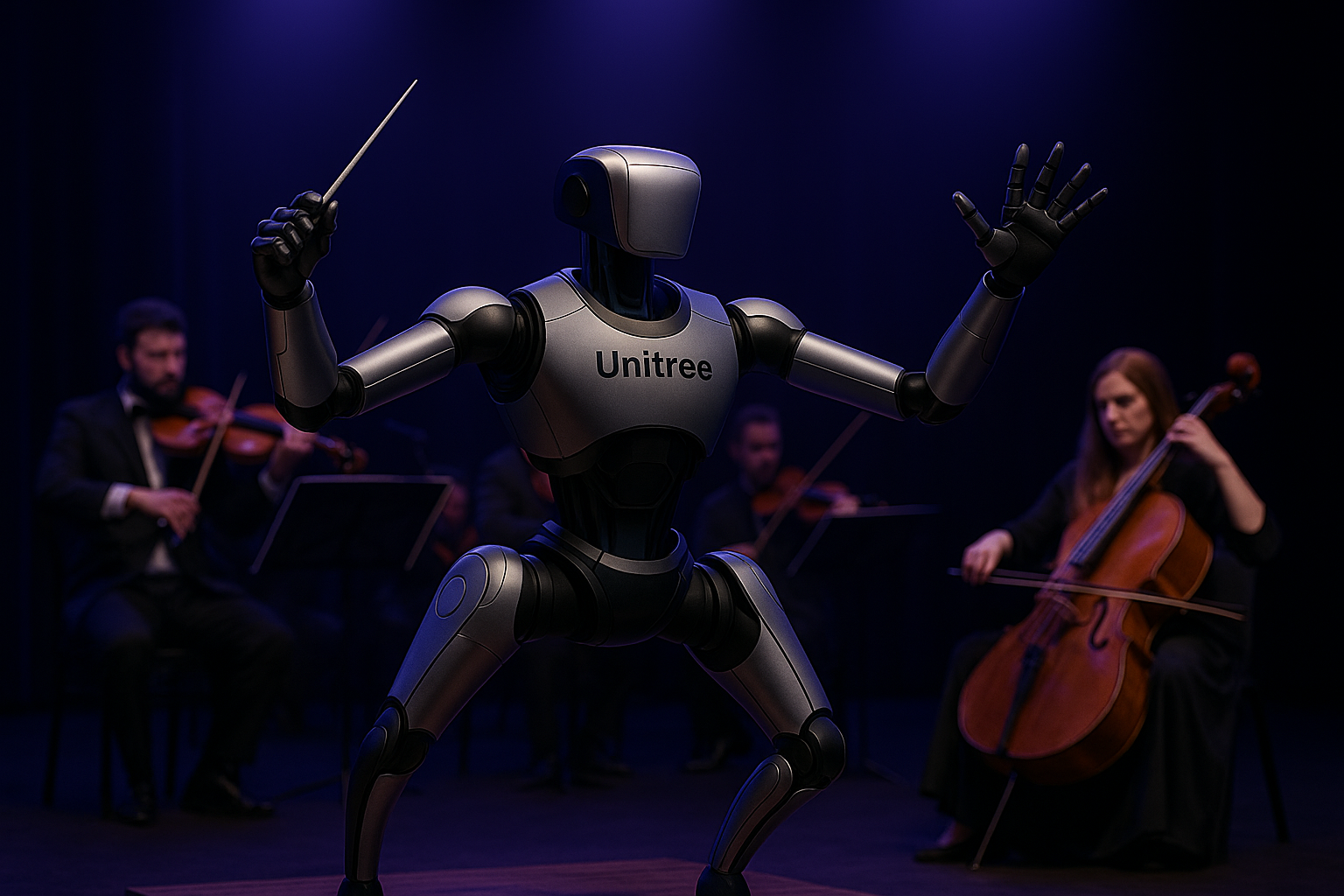

🤖 Robot Performer

Use a Unitree robot to heighten audience engagement with the performance.

Technologies Used

- ✅ Vision-language models (VLMs) for multimodal recognition (images, text, voice)

- ✅ Deep-learning algorithms for emotion analysis

- ✅ APIs for dynamic, real-time music control

- ✅ Integrated sensors on the Unitree robot

- ✅ NVIDIA GR00T for advanced movement, perception, and autonomous control

- ✅ Integration with orchestral instruments and digital audio streams
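The overview does not say which protocol the real-time music-control APIs use. Assuming, purely for illustration, that control reaches instruments or a digital audio workstation over MIDI, a change in musical intensity could be expressed as a standard MIDI Control Change message. The sketch below builds the raw bytes for controller 11 (Expression); `control_change` and `intensity_message` are hypothetical helper names, and actually sending the bytes is left to whatever MIDI output library the system uses.

```python
# Hypothetical sketch: MUSIC4D does not state that it uses MIDI.
EXPRESSION_CC = 11  # MIDI controller number 11 = Expression


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a raw 3-byte MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0-127")
    # Status byte: 0xB0 (Control Change) combined with the channel number.
    return bytes([0xB0 | channel, controller, value])


def intensity_message(intensity: float, channel: int = 0) -> bytes:
    """Map a musical intensity in [0, 1] to an Expression CC message."""
    intensity = max(0.0, min(1.0, intensity))  # clamp out-of-range input
    return control_change(channel, EXPRESSION_CC, round(intensity * 127))
```

Expression (CC 11) is chosen here because it scales loudness relative to the channel volume, which suits gradual, emotion-driven swells; other controllers would work equally well under a different design.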

🌍 Expected Impact

MUSIC4D aims to redefine the role of technology in art. The project opens new possibilities in entertainment, immersive education, and cognitive-emotional therapy. Future applications include interactive shows and sensor installations on audience members, which would allow the emotions inferred by the generative AI models to be cross-checked against the sensor data.

📅 Project Timeline

| Phase | Description | Status |
|---|---|---|
| Phase 1 | Study of AI models and prototype design | ✔️ Completed |
| Phase 2 | Integration of VLMs | ✔️ Completed |
| Phase 3 | Integration of “Tutti-bot” robotic framework | ✔️ Completed |
| Phase 4 | Testing with audience and orchestra | 🔄 In progress |
| Phase 5 | Final presentation and impact evaluation | 🕓 Planned |

“Emotion is not just listened to. It is interpreted, returned, and amplified.”