SPEME Lab is proud to share its latest research contribution, which pushes the boundaries of how humans and machines operate together.

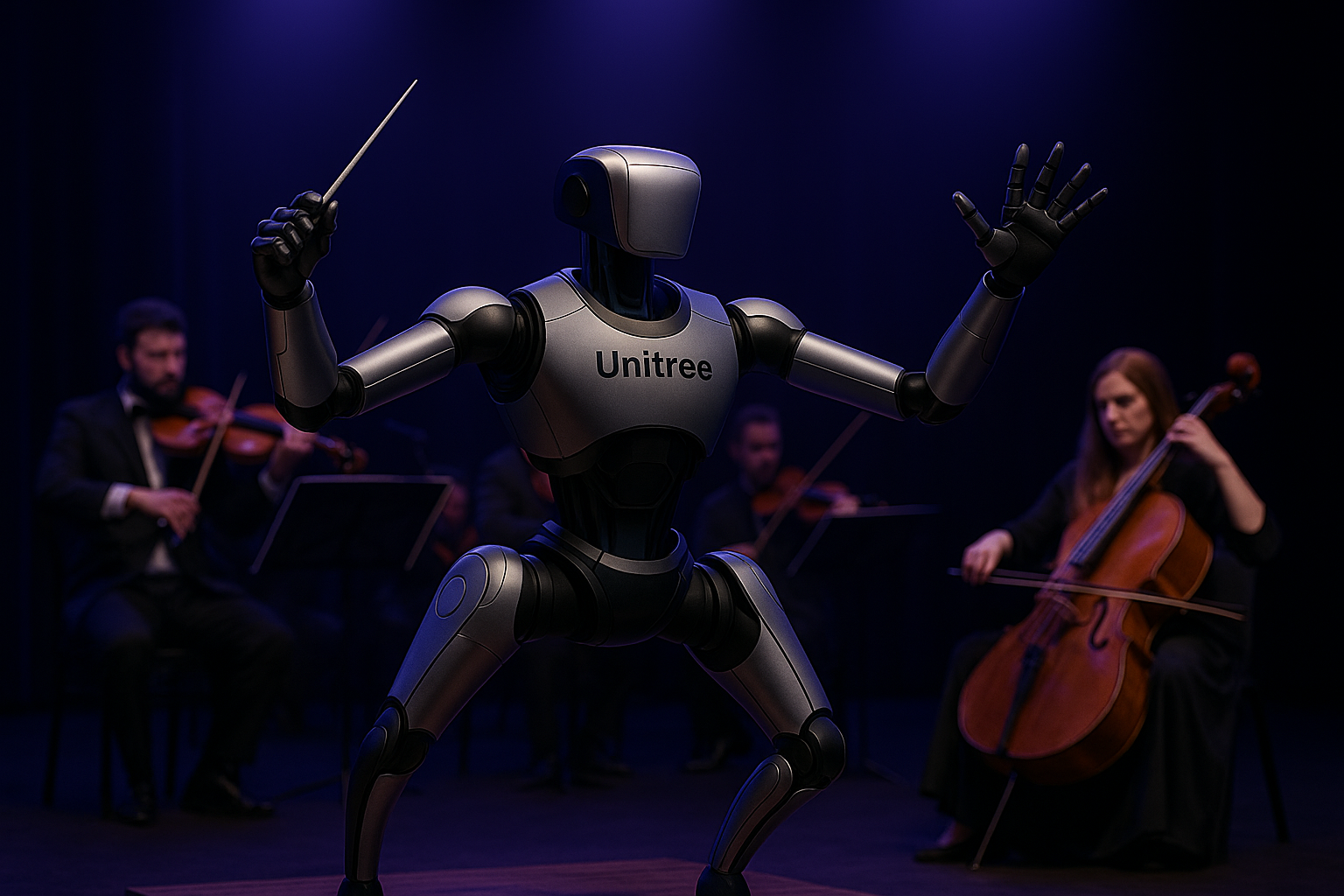

In the newly published paper, “Exploring Multi-Agent Human-Robot Teaming Dynamics in a Musical Orchestral Context,” the team explores a critical paradigm shift: moving beyond simple Human-Robot Interaction (HRI) and Collaboration (HRC) toward true Human-Robot Teaming (HRT).

Why an Orchestra?

The authors identify the musical orchestra as the ultimate testbed for complex team dynamics. In this setting, precision alone is not enough; the system requires “entitativity”: the state in which individual agents, human and robotic, feel and act as a single, cohesive unit.

Authored by Rashmi Chawla, Francesco Pupo, and Giancarlo Fortino, the paper presents a conceptual review that defines HRT through the framework of the “4Cs”:

• Communication

• Coordination

• Cooperation

• Command/Control

By integrating multimodal inputs (visual, auditory, and tactile), this research lays the groundwork for multi-agent systems in which robots do not just work alongside us; they play the same symphony with us.

Abstract:

The transition from Human-Robot Interaction (HRI) to Human-Robot Teaming (HRT) has brought a major transformation, from humans and robots working in collaboration to working as teammates. Progress in automation, network connectivity, and HRI represents a transformative shift toward Industry 5.0, integrating advanced technologies such as AI, robotics, and IoT to create a symbiotic ecosystem where human ingenuity and machine intelligence converge. This paper presents a conceptual review that provides a well-defined understanding of Multi-Agent HRT (MA-HRT), tracing the path from simple HRI to the more evolved Human-Robot Collaboration (HRC) and the emergence of HRT within the well-defined subsets of the 4Cs, combining multimodal communication and multiple sensory modalities, viz. visual, auditory, and tactile inputs, as part of HRI systems. The study also explores the onset of an MA-HRT framework for a musical orchestra system workflow to demonstrate key features such as coexistence, collaboration, control, and cooperation.