System Architecture Overview

High-Performance Edge Computing, Multi-Sensory Acquisition & Embodied AI

The MUSIC4D technical architecture is designed for real-time processing of multimodal data. It combines an Edge Node infrastructure for heavy computation, a sensory interface for environmental inputs, and humanoid robotics for physical interaction.

⚡ Computational Core: Edge Nodes

At the heart of our processing capabilities are the Edge Nodes. These units run deep learning models, including vision-language models (VLMs), and handle the “Tutti-bot” framework operations with low latency.

GPU Acceleration

To sustain high throughput for AI workloads, the nodes are equipped with high-performance GPUs. The architecture uses NVIDIA RTX PRO 6000 workstations (or, alternatively, the RTX A4500), which provide the CUDA cores needed for real-time inference, generative audio synthesis, and foundation model fine-tuning.

Additionally, edge computing is supported by NVIDIA Jetson AGX Orin boards for localized robotic processing.
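The split between workstation GPUs and Jetson boards described above can be written as a small routing table. This is a minimal sketch, not the project's actual scheduler: the names `NodeTier`, `WORKLOAD_TIERS`, and `tier_for` are hypothetical, and the workload labels are taken directly from the text.

```python
from enum import Enum


class NodeTier(Enum):
    """Hardware tiers named in the text (the enum itself is illustrative)."""
    WORKSTATION = "NVIDIA RTX workstation"
    JETSON = "NVIDIA Jetson AGX Orin"


# Workload-to-tier assignment as stated in the text; keys are hypothetical labels.
WORKLOAD_TIERS = {
    "real_time_inference": NodeTier.WORKSTATION,
    "generative_audio_synthesis": NodeTier.WORKSTATION,
    "foundation_model_fine_tuning": NodeTier.WORKSTATION,
    "localized_robotic_processing": NodeTier.JETSON,
}


def tier_for(workload: str) -> NodeTier:
    """Return the hardware tier for a workload, rejecting unknown labels."""
    try:
        return WORKLOAD_TIERS[workload]
    except KeyError:
        raise ValueError(f"unknown workload: {workload!r}") from None
```

Keeping the assignment in a single table makes it easy to see which workloads depend on the workstation GPUs and which run on the robot-side boards.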

🎙️ Sensory Interface: Audio Acquisition

To interpret the “voice” of the environment and the audience, the system uses a tiered microphone setup managed by a Focusrite Scarlett 18i20 (4th Gen) multi-channel audio interface.

High-Fidelity Capture

For critical audio analysis requiring maximum detail, we employ the Schoeps Flexi Set CMC6 MK2. These microphones provide the transparency needed to capture delicate acoustic nuances.

Ambient & Versatile Capture

To cover a broader range of inputs and ensure versatility across the performance area, the system integrates Behringer B5 microphones for distributed audio sensing.
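One way to represent the tiered setup in software is a channel map that tags each interface input with its microphone and tier. The sketch below is illustrative only: the channel numbers, the microphone counts, and the names `MicChannel`, `CHANNEL_MAP`, `channels_for`, and `select_tier` are assumptions, not the project's actual wiring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicChannel:
    channel: int  # 1-based input number on the audio interface (assumed wiring)
    model: str    # microphone model connected to that input
    tier: str     # "high_fidelity" or "ambient"


# Hypothetical wiring: counts and channel numbers are placeholders.
CHANNEL_MAP = [
    MicChannel(1, "Schoeps CMC6 MK2", "high_fidelity"),
    MicChannel(2, "Schoeps CMC6 MK2", "high_fidelity"),
    MicChannel(3, "Behringer B5", "ambient"),
    MicChannel(4, "Behringer B5", "ambient"),
]


def channels_for(tier, channel_map=CHANNEL_MAP):
    """Return zero-based buffer indices of the microphones in a tier."""
    return [m.channel - 1 for m in channel_map if m.tier == tier]


def select_tier(frame, tier, channel_map=CHANNEL_MAP):
    """Pick one tier's samples from a frame holding one sample per channel."""
    return [frame[i] for i in channels_for(tier, channel_map)]
```

With this map, `select_tier(frame, "high_fidelity")` would route only the Schoeps channels to detailed analysis, while the ambient channels could feed coarser, distributed sensing.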

👁️ Visual Input

Complementing the audio, the visual perception system utilizes Sony FDR-AX43A (Night Vision) and Panasonic HC-VXF11EG 4K cameras. These feed high-resolution video streams into our VLM pipeline for emotion recognition and scene analysis.

🤖 Embodied AI & Robotic Actuation

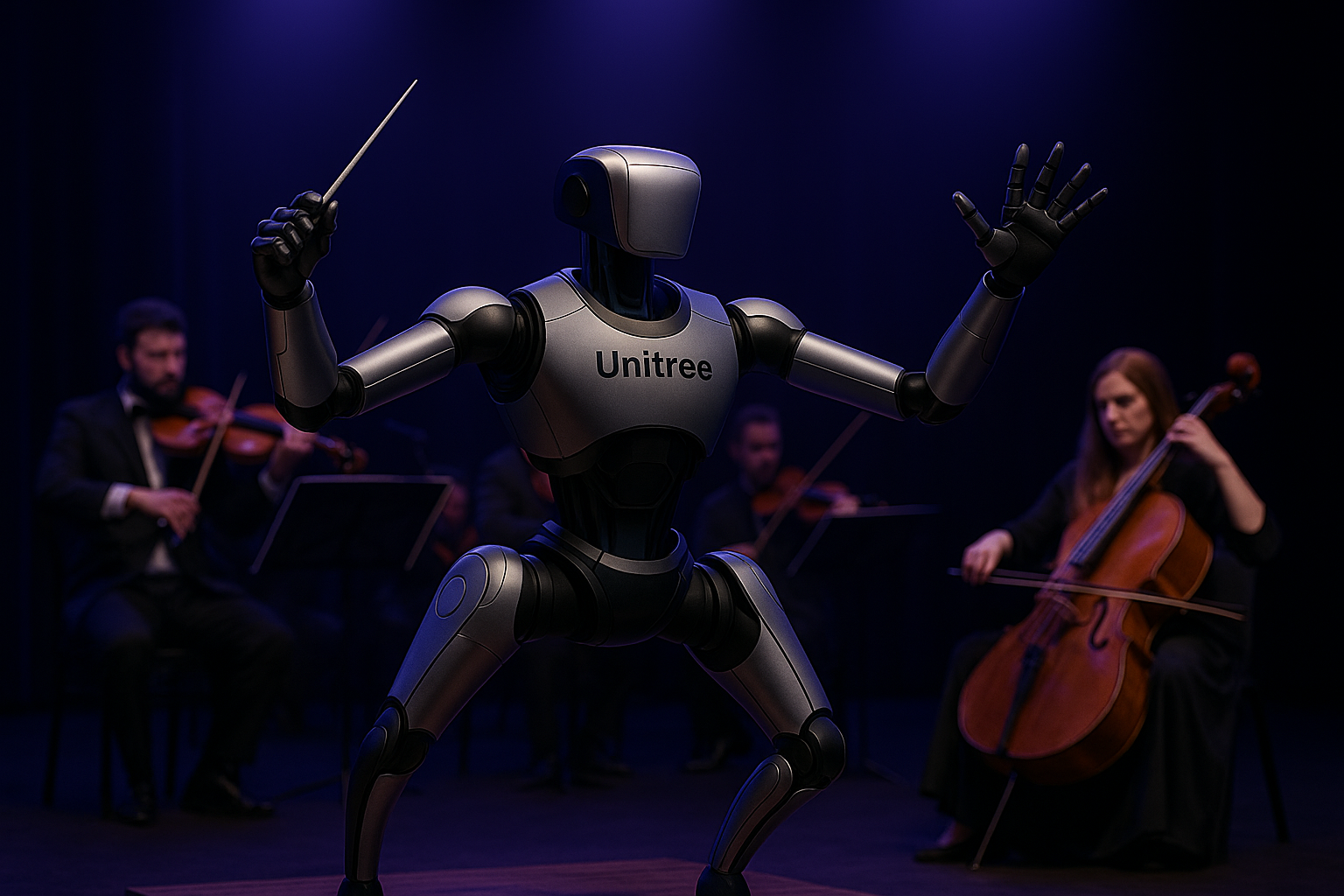

Transitioning from perception to physical interaction, the system integrates state-of-the-art humanoid robotics managed by generalist foundation models. This layer allows the “Tutti-bot” to inhabit the physical space alongside performers.

Unitree G1 Humanoid

The physical avatar of the system is the Unitree G1. This humanoid agent offers high-torque joint movement and agility, serving as the kinetic output for the generated behaviors. It translates the AI’s “emotions” into gestures and spatial navigation.

NVIDIA Project GR00T

Orchestrating the robot’s cognition is NVIDIA Project GR00T. This general-purpose foundation model enables multimodal understanding (language, video, and demonstration), allowing the robot to learn coordination and interact naturally rather than following hard-coded scripts.