The MUSIC4D project, approved under the “Notice for the granting of funding for the internationalisation of AFAM institutions,” is an international initiative that ranked first, both by score and in the order of funding, among the projects approved by the Ministry of University and Research. Coordinated by the Palermo Conservatory, the consortium involves all AFAM institutions in Sicily (Agrigento, Catania, Caltanissetta, Messina, Trapani) and Sardinia (Cagliari and Sassari), along with the University of Palermo (scientific lead: Prof. Antonio Chella) and the University of Calabria (scientific leads: Prof. Giancarlo Fortino, Prof. Francesco Pupo), for a total of 13 partners and nearly 6 million euros in funding.

The project, named “Music, Entrepreneurship, Creativity, and Digital Revolution: The Future of Performing Arts in the AFAM system (MUSIC4D: Music, entrepreneUrshIp, Creativity, for the Digital revolution),” aims to promote and enhance the popular musical culture of Southern Italy internationally, using advanced digital tools to create multi-sensory environments and innovative artistic platforms. Over the next two years, all activities will take place in globally strategic areas: Australia, Latin America, Vietnam, the United States, China, the Mediterranean, and the South Pacific (Fiji Islands, Polynesia, Micronesia).

As the University of Calabria, we chose to support the project with an advanced technological component that not only integrates music and artificial intelligence but also explores the possible applications of emerging technologies in the context of the performing arts. This initiative aims to develop solutions that can be used both in academic settings and in artistic events and immersive installations. Within the project, the focus is not only on the implementation of new technologies but, above all, on the ability to transmit the musical cultural heritage of Southern Italy, adapting it to digital formats that can be globally accessible.

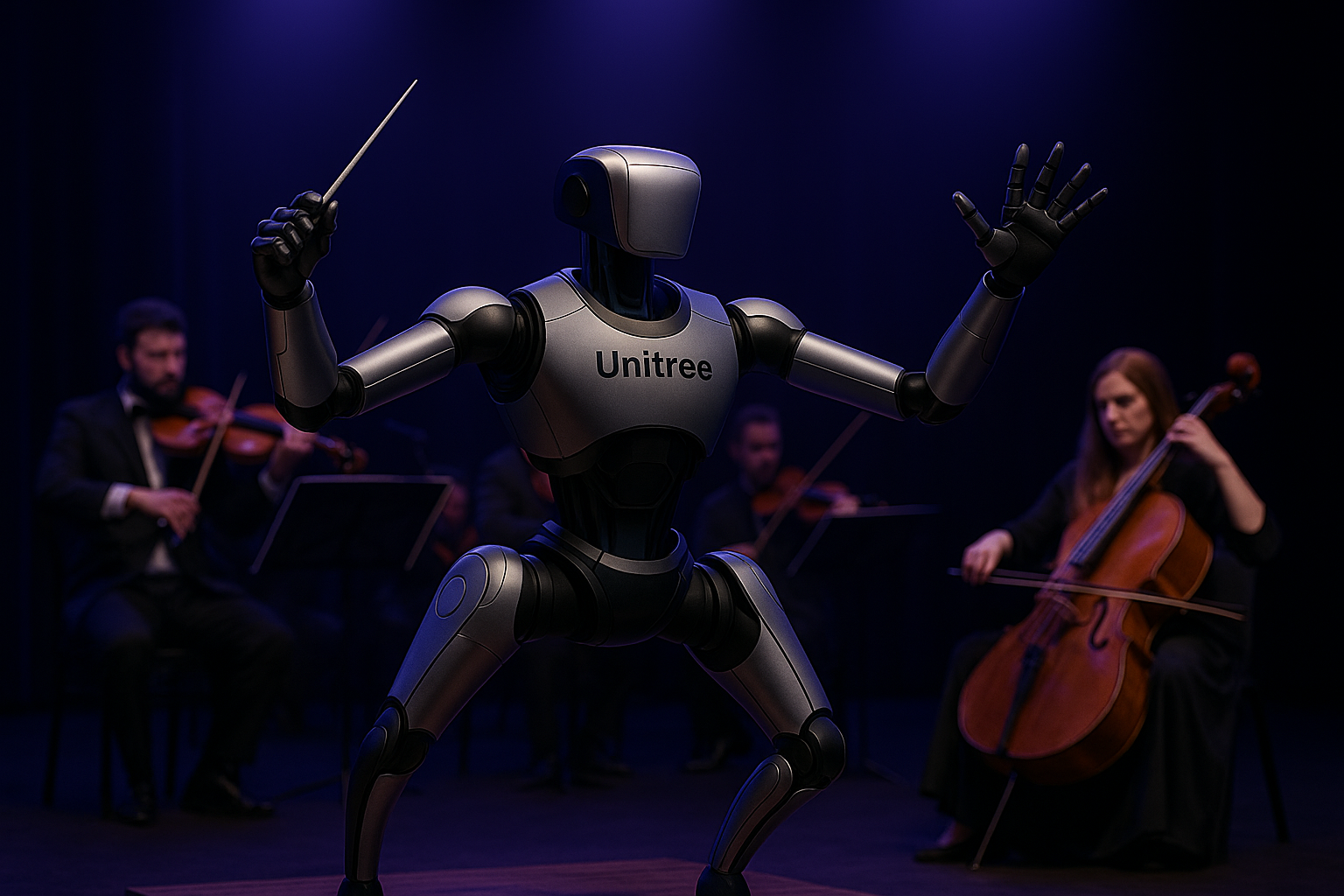

The project aims to develop an integrated system that combines large-scale deep learning models, advanced sensing capabilities, and a high-performance robotic platform. Central to this vision is a robot capable of analyzing and processing, in real time, complex signals from the surrounding environment, such as facial expressions, body movements, postures, environmental parameters, and other emotional indicators.

The computational component is based on models with 7 to 8 billion parameters, a size chosen to balance processing power against adaptability in dynamic environments. These models will be trained on datasets created specifically to capture the emotional and behavioral nuances that matter in a performance context, with the aim of providing refined and responsive interpretations of human emotions.
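To give a sense of what the 7 to 8 billion parameter range implies for on-board or near-robot hardware, the following back-of-the-envelope sketch estimates the memory needed just to store the model weights at different numeric precisions. The precisions listed and the function name `weight_memory_gb` are illustrative assumptions, not details of the project; the figures cover weights only and ignore activations, caches, and runtime overhead.

```python
# Rough weight-memory estimate for a dense model.
# Assumption: memory = parameter count x bytes per parameter (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for params in (7e9, 8e9):
        for precision in BYTES_PER_PARAM:
            size = weight_memory_gb(params, precision)
            print(f"{params / 1e9:.0f}B parameters @ {precision}: {size:.1f} GB")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision, and proportionally less if quantized, which is one reason models of this size are often considered a practical middle ground for interactive use.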

From a hardware and robotics perspective, the project will use the NVIDIA Isaac GR00T N1 platform, which supports advanced management of movement, perception, and autonomous control. Integrating the data collected by the robot’s sensors (vision, posture, sound, environmental parameters) with the outputs generated by the deep learning models will allow the system to learn continuously and adapt to its interaction with the environment and the people present.
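The sense, interpret, adapt cycle described above can be illustrated with a deliberately simplified sketch. Everything here is hypothetical: the `SensorFrame` fields, the fixed fusion weights, and the tempo mapping are stand-ins chosen for illustration, and a plain weighted average replaces the deep learning models the project actually plans to use. It does not use any NVIDIA API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class SensorFrame:
    """One time step of hypothetical readings, each already normalized to [0, 1]."""
    facial_arousal: float
    movement_energy: float
    sound_level: float


def fuse(frame: SensorFrame, weights: Sequence[float] = (0.5, 0.3, 0.2)) -> float:
    """Combine the channels into one arousal score via a weighted average.

    The weights are illustrative; a learned model would replace this step.
    """
    values = (frame.facial_arousal, frame.movement_energy, frame.sound_level)
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


class SmoothedEstimate:
    """Exponential moving average, so behavior changes gradually, not abruptly."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, observation: float) -> float:
        if self.value is None:
            self.value = observation  # first observation initializes the estimate
        else:
            self.value = self.alpha * observation + (1 - self.alpha) * self.value
        return self.value


def tempo_scale(arousal: float, low: float = 0.9, high: float = 1.1) -> float:
    """Map an arousal score in [0, 1] linearly to a tempo multiplier."""
    arousal = min(1.0, max(0.0, arousal))  # clamp out-of-range inputs
    return low + (high - low) * arousal


if __name__ == "__main__":
    estimate = SmoothedEstimate(alpha=0.3)
    frames = [
        SensorFrame(0.2, 0.1, 0.3),
        SensorFrame(0.6, 0.5, 0.7),
        SensorFrame(0.9, 0.8, 0.9),
    ]
    for frame in frames:
        arousal = estimate.update(fuse(frame))
        print(f"arousal={arousal:.2f} tempo x{tempo_scale(arousal):.3f}")
```

The smoothing step reflects one plausible design choice: a conductor-like system should respond to sustained changes in the room rather than to momentary spikes in a single sensor.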

This approach will not only allow the system to conduct an orchestra in a sensitive and adaptive way but also create new modes of interaction between technology and the audience. The robot will be able to modulate its behavior, gestures, and communication according to the emotional context, making each performance unique. More broadly, the system offers an innovative way to bring together artificial intelligence, musical expression, and the promotion of Italian cultural heritage, exploring new forms of storytelling and engagement through the dialogue between human, machine, and art.